Israeli military develops AI tool using Palestinian surveillance data

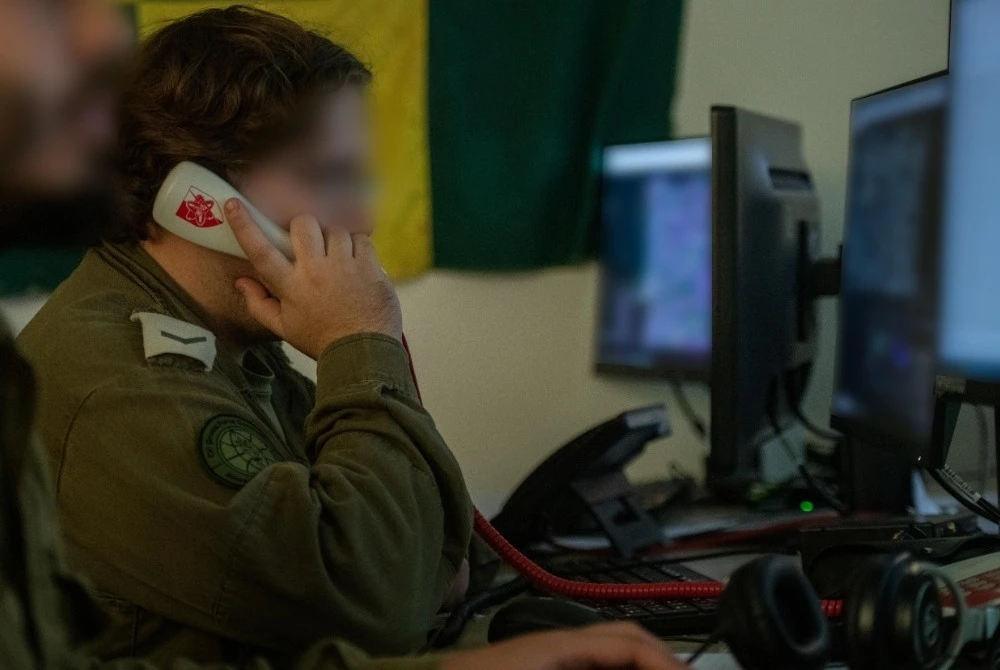

Soldiers of an Israeli army Military Intelligence Directorate team for the Golani Infantry Brigade are seen working at computers, in a handout photo published November 12, 2023 (Israeli army Photo)


The Israeli military has built an artificial intelligence (AI) tool using vast amounts of intercepted Palestinian communications, a joint investigation by The Guardian, +972 Magazine, and Local Call has revealed.

The tool, developed by Unit 8200, the Israeli military's signals intelligence unit, is designed to analyze intercepted conversations and text messages, raising concerns about surveillance and the ethical use of AI in intelligence operations.

AI surveillance in the occupied territories

According to sources familiar with the project, Unit 8200 trained the AI model to understand spoken Arabic using extensive surveillance data collected from the occupied Palestinian territories.

Described as a chatbot-like system, the model lets intelligence officers pose questions about people under surveillance and receive real-time answers drawn from the collected data.

The development of the AI model reportedly accelerated following the outbreak of the war in Gaza in October 2023. It remains unclear whether the system has been fully deployed.

A large-scale intelligence effort

Unit 8200, often compared to the U.S. National Security Agency (NSA), has a history of using AI-powered tools for intelligence gathering.

Israeli military AI systems such as Gospel and Lavender reportedly played key roles in identifying targets for airstrikes in Gaza, enabling the rapid bombardment of thousands of locations.

During a military AI conference in Tel Aviv last year, former military intelligence technologist Chaked Roger Joseph Sayedoff acknowledged that the project required "psychotic amounts" of data, saying the goal was to create the largest possible dataset of Arabic-language communications.

Several former intelligence officials confirmed the initiative’s existence and described how earlier machine learning models were used before launching the more advanced system.

“AI amplifies power,” one source familiar with the project stated. “It’s not just about preventing attacks. I can track human rights activists, monitor Palestinian construction in Area C [of the West Bank]. I have more tools to know what every person in the West Bank is doing.”

Ethical and legal concerns

Human rights advocates have warned that integrating AI into military intelligence raises significant risks, including bias, errors, and a lack of transparency.

Zach Campbell, a senior surveillance researcher at Human Rights Watch (HRW), criticized the potential use of large language models (LLMs) to make decisions about Palestinians under Israeli occupation. “It’s a guessing machine,” Campbell said. “And ultimately, these guesses can end up being used to incriminate people.”

Nadim Nashif, director of 7amleh, a Palestinian digital rights organization, argued that Palestinians have effectively become "subjects in Israel's laboratory" for AI development and that such technologies reinforce mechanisms of surveillance and control.

Use of AI in military operations

Reports indicate that AI has played a central role in Israeli military operations, particularly in Gaza.

According to sources cited by The Washington Post, AI-powered tools allowed the Israeli military to rapidly process intelligence and execute thousands of airstrikes in a matter of weeks.

AI models such as Lavender and Gospel were reportedly used to analyze intercepted communications and identify potential targets. These systems processed vast amounts of data from drones, fighter jets, and phone intercepts, cross-referencing intelligence sources to select targets for military strikes.

However, former military officials have expressed concerns about the reliance on AI in warfare. Some claimed that the speed and automation of target selection reduced the time for human oversight, leading to mistakes.

In one reported case, an Israeli airstrike in Gaza in November 2023, believed to have been influenced by AI-generated intelligence, killed four civilians, including three teenage girls.

Lack of oversight and accountability

Despite concerns about the ethical implications of AI in military surveillance and warfare, the Israeli army has declined to provide details about the new AI system’s safeguards.

“Due to the sensitive nature of the information, we cannot elaborate on specific tools, including methods used to process information,” an Israeli army spokesperson said.

However, the spokesperson maintained that the military follows rigorous procedures when deploying AI. "The Israeli army implements a meticulous process in every use of technological abilities," the spokesperson added. "That includes the integral involvement of professional personnel in the intelligence process in order to maximize information and precision to the highest degree."

Global implications of AI in military intelligence

Israel is not alone in integrating AI into intelligence operations.

The U.S. Central Intelligence Agency (CIA) has reportedly deployed a ChatGPT-like tool to analyze open-source data, while the UK’s intelligence agencies are also developing AI-driven surveillance models.

However, experts argue that Israel's approach differs because of the vast scale of its surveillance of Palestinians. A former Western intelligence official noted that Israel's intelligence community appears to take "greater risks" with AI than other democracies, which impose stricter oversight on surveillance practices.

Future of AI in surveillance and warfare

The use of AI in military intelligence is expected to expand, raising concerns over privacy, accountability, and civilian safety. While AI can enhance intelligence capabilities, experts warn that the technology remains prone to biases and errors that can have severe real-world consequences.

Brianna Rosen, a former White House national security official, cautioned that AI’s predictive nature makes it difficult to verify its accuracy. “Mistakes are going to be made, and some of those mistakes may have very serious consequences,” she said.