Nvidia unveils AI chatbot integration for RTX-powered systems

Nvidia is offering users the opportunity to test its new AI chatbot, Chat with RTX, which runs directly on their personal computers.

Nvidia is introducing a new tool, designed to encourage use of its latest graphics processing units, that lets owners of GeForce RTX 30 Series and 40 Series cards run an AI-driven chatbot offline on a Windows PC.

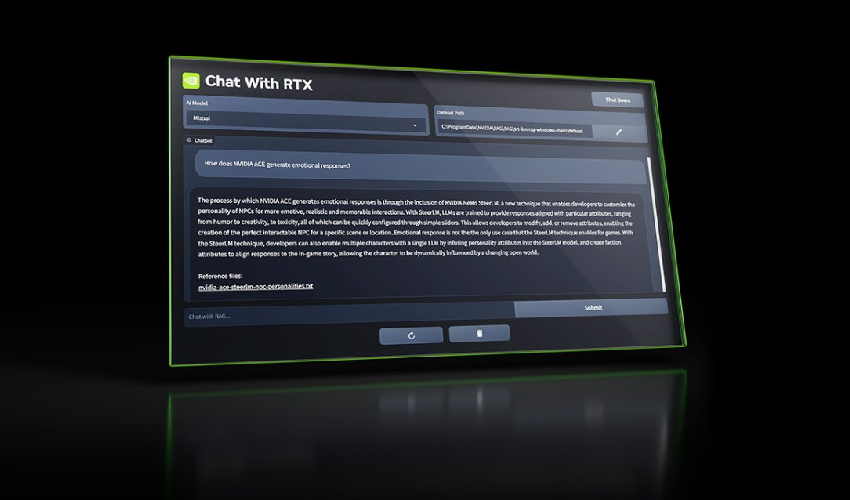

This tool, named Chat with RTX, enables users to personalize a GenAI model similar to OpenAI’s ChatGPT by linking it to documents, files, and notes for querying purposes. Instead of manually sifting through saved content, users can input queries directly. For instance, a user could ask, “What was the name of the restaurant my partner recommended in Las Vegas?” and Chat with RTX will search through designated local files to provide the answer in context.
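
The article does not say how Chat with RTX searches the designated files. As a rough illustration only, the idea of matching a question against local documents can be sketched with simple word overlap. The function names and the sample documents below are hypothetical, and a real tool would use far more capable retrieval than this.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_match(query, documents):
    """Return the name of the document that shares the most words with the query.

    `documents` maps a file name to its text. This is a toy scoring scheme,
    not a description of how Chat with RTX works.
    """
    query_words = tokenize(query)
    return max(
        documents,
        key=lambda name: len(query_words & tokenize(documents[name])),
    )


# Made-up notes, used only to illustrate the idea.
notes = {
    "trip.txt": "My partner recommended a restaurant in Las Vegas for our trip.",
    "taxes.txt": "Receipts and forms for the annual tax return.",
}
question = "What was the name of the restaurant my partner recommended in Las Vegas?"
print(best_match(question, notes))
```

Once the most relevant file is found, its text would be handed to the model alongside the question so the answer can be given in context.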

While Chat with RTX primarily utilizes Mistral’s open-source model, it also supports various other text-based models, such as Meta’s Llama 2. Nvidia cautions that downloading all the required files will consume a significant amount of storage, ranging from 50 gigabytes to 100 gigabytes, depending on the selected model(s).

At present, Chat with RTX is compatible with text, PDF, .doc, .docx, and .xml formats. By directing the application to a folder containing supported files, users can load those files into the dataset the model draws on when answering. Additionally, Chat with RTX can take the URL of a YouTube playlist and load transcriptions of the videos in the playlist, allowing the selected model to query their contents.
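
To make the folder step concrete, here is a minimal sketch of picking out supported files from a directory. It is an illustration, not Nvidia's code: the function name is hypothetical, mapping "text" to the `.txt` extension is an assumption, and so is searching subfolders.

```python
from pathlib import Path

# Extensions based on the formats listed above.
# Treating "text" as ".txt" is an assumption.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder):
    """Return a sorted list of files under `folder` with a supported extension.

    Subfolders are searched too, which is an assumption made for this sketch.
    """
    root = Path(folder)
    return sorted(
        path
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

Calling `find_supported_files("C:/Users/me/Documents")` (a placeholder path) would return only the files a tool like this could load and skip images, spreadsheets, and anything else in the folder.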

Nvidia outlines several limitations in its how-to guide.

Chat with RTX does not retain context, meaning that it does not consider previous questions when answering subsequent ones. For instance, if you inquire about a common bird in North America and then ask about its colors, Chat with RTX will not recognize that you are referring to birds.
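
The difference between a chatbot that keeps context and one that does not can be shown with a small sketch of how prompts might be assembled. Both functions are hypothetical and the example answer is made up; this is not how Chat with RTX builds its prompts, only an illustration of the limitation.

```python
def build_stateless_prompt(question):
    """Build a prompt from the current question alone, with no history."""
    return f"Question: {question}\nAnswer:"


def build_contextual_prompt(history, question):
    """Build a prompt that includes earlier question/answer pairs.

    `history` is a list of (question, answer) tuples from earlier turns.
    """
    lines = [f"Question: {q}\nAnswer: {a}" for q, a in history]
    lines.append(f"Question: {question}\nAnswer:")
    return "\n".join(lines)


# Made-up earlier turn, used only for illustration.
history = [("What is a common bird in North America?", "The cardinal.")]
follow_up = "What are its colors?"

# Without history, nothing in the prompt says what "its" refers to.
print(build_stateless_prompt(follow_up))

# With history, the earlier answer travels along with the follow-up.
print(build_contextual_prompt(history, follow_up))
```

In the stateless case the model sees only "What are its colors?", which is why a follow-up question has to restate its subject.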

Nvidia also acknowledges that the relevance of the app’s responses can be influenced by various factors, some of which are easier to control than others. These factors include the wording of the question, the performance of the selected model, and the size of the dataset the model draws on. Asking for facts covered in a couple of documents is likely to yield better results than asking for a summary of a document or set of documents. According to Nvidia, response quality generally improves with larger datasets, and also when Chat with RTX is pointed at more content about a specific subject.

As a result, Chat with RTX is more of a novelty than a tool intended for production use. Nevertheless, there is merit in applications that make it easier to run AI models locally, which is an emerging trend.

In a recent report, the World Economic Forum predicted a “dramatic” increase in affordable devices capable of running GenAI models offline, including PCs, smartphones, Internet of Things devices, and networking equipment. The WEF cited clear advantages, noting that offline models are inherently more private, as the data they process never leaves the device they are running on. Additionally, they offer lower latency and are more cost-effective than cloud-hosted models.

Of course, making tools for running and training models more accessible also presents opportunities for malicious actors. A quick Google search reveals numerous listings for models fine-tuned on toxic content from unscrupulous sources on the web. However, proponents of apps like Chat with RTX argue that the benefits outweigh the risks. Only time will tell.

Source: Newsroom