Deepfake technology: Dual-edged sword of synthetic media

An AI-generated illustration depicting deepfake technology and synthetic media. (Photo by DALL-E via OpenAI)

Deepfake technology, a sophisticated product of artificial intelligence (AI), is revolutionizing how media is created and consumed. By generating synthetic images, videos, and audio that can portray events or people in situations that never occurred, deepfakes present both opportunities and significant challenges.

Deepfakes are a subset of the general category of “synthetic media” or “synthetic content”. Many popular articles on the subject define synthetic media as any media that has been created or modified through the use of AI or machine learning (ML), especially if done in an automated fashion.

As the technology becomes more accessible, it raises critical questions about its ethical implications, particularly in the context of media, politics, and security.

Origins and evolution of deepfake technology

The term “deepfake” is a portmanteau of “deep learning,” a subset of machine learning involving multiple layers of processing, and “fake,” denoting the content’s lack of authenticity.

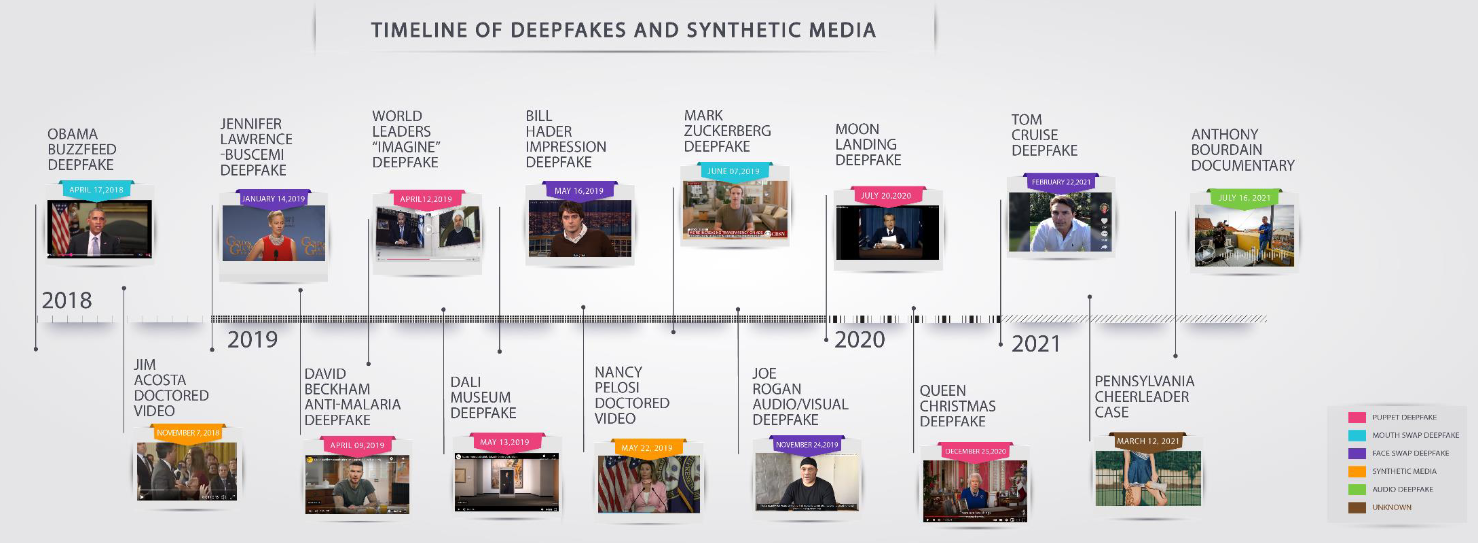

The term emerged in 2017 when a Reddit user named “deepfakes” began sharing videos that used face-swapping technology to insert celebrities’ likenesses into existing pornographic videos. Since its inception, deepfake technology has rapidly evolved, extending beyond its initial uses to impact various sectors, including entertainment, politics, and security.

Notable examples of deepfakes include an image of Pope Francis in a puffer jacket and a video of Facebook CEO Mark Zuckerberg discussing his company’s control over user data, neither of which occurred in reality.

Applications of deepfakes and ethical concerns

Deepfakes have been employed for both positive and negative purposes. On the one hand, they have been used in pornography, political disinformation, and scams, leading to widespread concern about their potential to deceive and cause harm.

Harmful uses include fake videos of public figures and false information spread during critical political events.

Conversely, deepfakes have found positive applications in art and social awareness campaigns.

For example, the Dali Museum in Florida utilized deepfake technology to create a life-sized video display of Salvador Dali, bringing the late artist’s words to life.

Additionally, soccer star David Beckham participated in a malaria awareness campaign where deepfake videos made it appear as though he was speaking in nine different languages.

Technological developments and detection challenges

The rapid advancement of deepfake technology has made it increasingly difficult to distinguish between real and fake media.

Researchers have developed various techniques to improve the realism of deepfakes, utilizing algorithms such as generative adversarial networks (GANs). However, these advancements have also made detection more challenging.

Generative adversarial networks (GANs)

A key technology used to produce deepfakes and other synthetic media is the “generative adversarial network,” or GAN. In a GAN, two ML networks develop synthetic content through an adversarial process. The first network is the “generator.” Data that represents the type of content to be created is fed to this first network so that it can ‘learn’ the characteristics of that type of data.

The generator then attempts to create new examples of that data which exhibit the same characteristics as the original data. These generated examples are then presented to the second machine learning network, which has also been trained (but through a slightly different approach) to learn to identify the characteristics of that type of data.

The second network, the adversary (commonly called the “discriminator”), attempts to detect flaws in the presented examples and rejects those that it determines do not exhibit the same sort of characteristics as the original data, identifying them as “fakes.” These fakes are then returned to the first network, so it can learn to improve its process of creating new data. This back and forth continues until the generator produces fake content that the adversary identifies as real.
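The back and forth described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how production deepfake tools are built: the "content" is a single number drawn from a bell curve, the generator is a two-parameter linear function, and the adversary (named `discriminator` in the code, the usual term in the GAN literature) is a simple logistic model. All function names, parameter names, and default values here are hypothetical and chosen only for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator(x, w, c):
    """Adversary: estimated probability (0..1) that sample x is real."""
    return sigmoid(w * x + c)


def discriminator_step(w, c, x_real, x_fake, lr):
    """One gradient step on -[log D(x_real) + log(1 - D(x_fake))]."""
    d_real = discriminator(x_real, w, c)
    d_fake = discriminator(x_fake, w, c)
    grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
    grad_c = -(1.0 - d_real) + d_fake
    return w - lr * grad_w, c - lr * grad_c


def generator_step(a, b, z, w, c, lr):
    """One gradient step on -log D(a*z + b): nudge fakes toward 'real'."""
    x_fake = a * z + b
    grad_x = -(1.0 - discriminator(x_fake, w, c)) * w
    return a - lr * grad_x * z, b - lr * grad_x


def train(steps=500, lr=0.05, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate adversary and generator updates (assumed toy settings)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: fake = a * noise + b
    w, c = 0.0, 0.0  # adversary parameters
    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)
        z = rng.gauss(0.0, 1.0)
        # The adversary sees one real and one generated sample.
        w, c = discriminator_step(w, c, x_real, a * z + b, lr)
        # The generator is updated using the adversary's feedback.
        z = rng.gauss(0.0, 1.0)
        a, b = generator_step(a, b, z, w, c, lr)
    return a, b, w, c
```

Calling `train()` returns the final generator and adversary parameters. In this toy setup one would expect the generator's offset `b` to drift toward the mean of the real data, although adversarial training often oscillates rather than settling, which mirrors the contest between creators and detectors of deepfakes. Real systems replace both simple models with deep neural networks operating on images, video, or audio.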

Efforts to detect deepfakes, legal and regulatory responses

Efforts to detect deepfakes include analyzing inconsistencies in facial movements, lighting, and other subtle details that may indicate manipulation. However, as detection methods improve, so do the techniques used to create more convincing deepfakes, leading to a continuous battle between creators and detectors.

Governments and organizations worldwide are trying to address the challenges posed by deepfakes. In the United States, for example, congressional hearings have been held to discuss the potential impact of deepfakes on elections and national security. In response, technology companies are developing tools to detect and limit the spread of deepfakes on social media platforms.

In some cases, deepfakes have been used for blackmail, leading to discussions about the need for stronger legal frameworks to protect individuals from such exploitation. The increasing prevalence of deepfake scams, which often involve the likenesses of celebrities or politicians, further highlights the urgent need for regulatory action.