AI deepfakes in politics: Latest victim, Kamala Harris

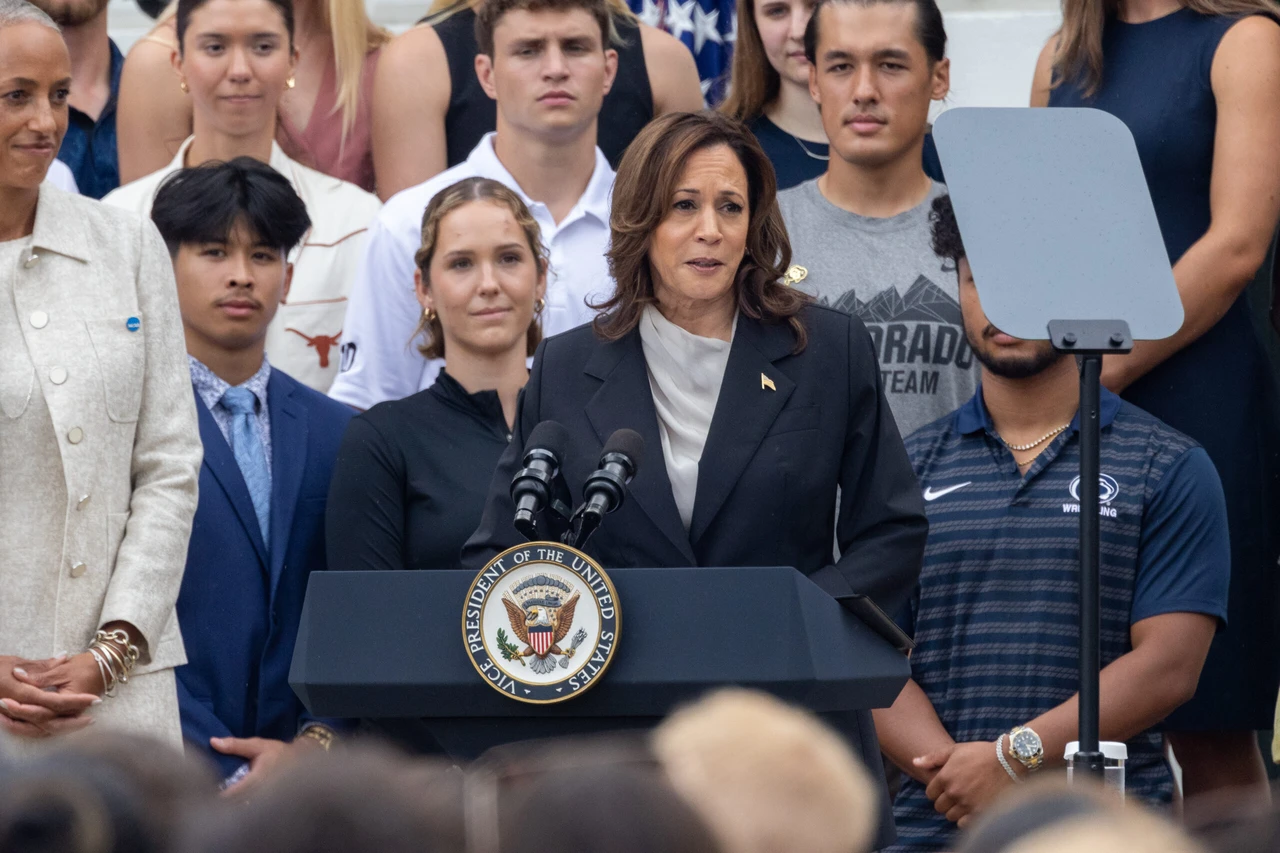

Vice President Kamala Harris spoke during an event at the White House South Lawn, Washington, July 22, 2024. (AA Photo)

A manipulated video featuring Vice President Kamala Harris has raised widespread concern about artificial intelligence’s potential to distort reality and mislead voters.

Tech billionaire Elon Musk shared the video on his social media platform X, an episode that highlights the escalating risks posed by AI-generated content as the U.S. presidential election approaches.

Elon Musk shares misleading AI-generated presidential video

The video, originally posted by YouTuber Mr Reagan, used visuals from a genuine campaign ad by Harris but replaced the voice-over with an AI-generated imitation.

The imitation voice falsely claims that Harris is a “diversity hire” because she is both a woman and a person of color, and that this makes her immune to criticism. The voice-over also disparages Harris’s ability to lead the country and mocks Joe Biden.

Although the original video was disclosed as a parody, Musk reposted it without context or a disclaimer, and it drew more than 123 million views and caused widespread confusion.

Global examples of AI manipulation in politics

This incident is not isolated. AI-driven disinformation campaigns have appeared globally, affecting elections and public perception.

In Türkiye’s 2023 presidential campaign, an AI-generated deepfake video depicted opposition candidate Kemal Kilicdaroglu being endorsed by a terrorist group. President Recep Tayyip Erdogan’s staff shared the video, which was clearly fabricated but circulated widely.

In Argentina’s 2023 elections, AI techniques created misleading videos about candidates, spreading disinformation. Despite the explicit labeling of these videos as AI-generated, they were shared widely without disclaimers, contributing to voter confusion.

Rob Weissman, co-president of the advocacy group Public Citizen, said of the Harris video, “I don’t think that’s obviously a joke. I’m certain that most people looking at it don’t assume it’s a joke. The quality isn’t great, but it’s good enough. And precisely because it feeds into preexisting themes that have circulated around her, most people will believe it to be real.”

Other regions have also seen the troubling use of AI in politics. In Russia, political managers have utilized deepfakes to discredit antiwar activists and influential political emigres.

A poorly made deepfake of Ukrainian President Volodymyr Zelenskyy urging his army to surrender was published at the onset of the invasion of Ukraine, adding to the chaos. This tactic, used by the Kremlin, aims to erode trust and destabilize civil society.

In Bangladesh, opposition lawmaker Rumeen Farhana faced a deepfake video depicting her in a bikini, causing significant outrage in the conservative, majority-Muslim nation.

Regulatory challenges for AI, future implications

With over 60 countries, including the United States, holding national elections in 2024, the risk of AI-driven disinformation is alarmingly high. The Federal Communications Commission (FCC) recently advanced a proposal requiring political advertisers to disclose the use of AI in their content.

FCC Chair Jessica Rosenworcel stated, “Today the FCC takes a major step to guard against AI being used by bad actors to spread chaos and confusion in our elections.”

Regulating AI in politics remains challenging. Some U.S. states have enacted laws addressing AI use in campaigns, but federal legislation lags. Social media platforms like X have policies against misleading AI content, but enforcement is inconsistent.

Weissman called such content “the kind of thing that we’ve been warning about,” referring to the potential for AI to mislead voters.

The use of AI in politics isn’t limited to disinformation. In the U.K., an AI candidate named “AI Steve” ran for Parliament, interacting with voters and formulating policies based on their feedback.

This innovative approach demonstrates AI’s potential to engage constituents and enhance democratic participation. However, it also underscores the need for ethical guidelines to ensure AI is used responsibly in the political sphere.

As AI technology advances, the lines between reality and manipulation blur further, demanding urgent regulatory action.